Dropping High-Cardinality Dimensions

Overview

High-cardinality dimensions can significantly increase telemetry costs and complexity, particularly when sending data to third-party observability platforms. By using a Dimension Drop Rule, you can selectively remove these costly, high-cardinality tags/dimensions from your telemetry before it reaches your storage or analysis systems. This helps control expenses, reduce data noise, and ensure that your telemetry pipeline remains both efficient and cost-effective.

What Does This Rule Do?

The Dimension Drop Rule identifies one or more dimensions (also known as tags or labels) in your incoming telemetry and removes them before the data is exported or aggregated. This is especially useful if you send data to a vendor such as Datadog, or to any platform with a per-metric or per-tag pricing model, because it helps prevent the cost and complexity that come from metrics with too many unique label values.

For example:

- Remove host-specific tags that produce a massive cardinality explosion (e.g., host_id).

- Drop user or session-based attributes that create one unique series per user or session, leading to cost spikes.

- Clean up noisy dimensions that add no analytical value.
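To make the cardinality effect concrete, the following Python sketch counts unique metric series before and after a tag is dropped. It is an illustration only, not the rule's implementation: the helper name count_series, the metric name, and the sample data (1,000 hosts across 2 regions) are all assumptions made for this example.

```python
def count_series(points, drop=()):
    """Count unique (metric name, tag set) combinations, ignoring dropped tags."""
    series = set()
    for name, tags in points:
        # A series is identified by its metric name plus its remaining tags.
        kept = tuple(sorted((k, v) for k, v in tags.items() if k not in drop))
        series.add((name, kept))
    return len(series)


# Hypothetical sample: one metric reported by 1,000 hosts in 2 regions.
points = [
    ("http.requests", {"host_id": f"host-{i}", "region": "us" if i % 2 else "eu"})
    for i in range(1000)
]

before = count_series(points)
after = count_series(points, drop={"host_id"})
print(before, after)  # 1000 2
```

With host_id present, every host is its own series. Once it is dropped, only the two region values remain to distinguish series.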

Key Benefits

- Cost Control: By dropping high-cardinality dimensions at the source, you limit the explosion of unique metric series, which can significantly reduce expenses associated with data ingestion and storage on platforms like Datadog.

- Data Simplicity: Filtering out overly granular tags helps you focus on the most meaningful and actionable dimensions. This leads to simpler dashboards, queries, and alerts.

- Performance Optimization: With fewer unique tag combinations, back-end processing, querying, and indexing become more efficient.

- Compliance and Privacy: If some dimensions contain sensitive information or personally identifiable data, removing them ensures that this data never leaves your controlled environment.

Options and Parameters

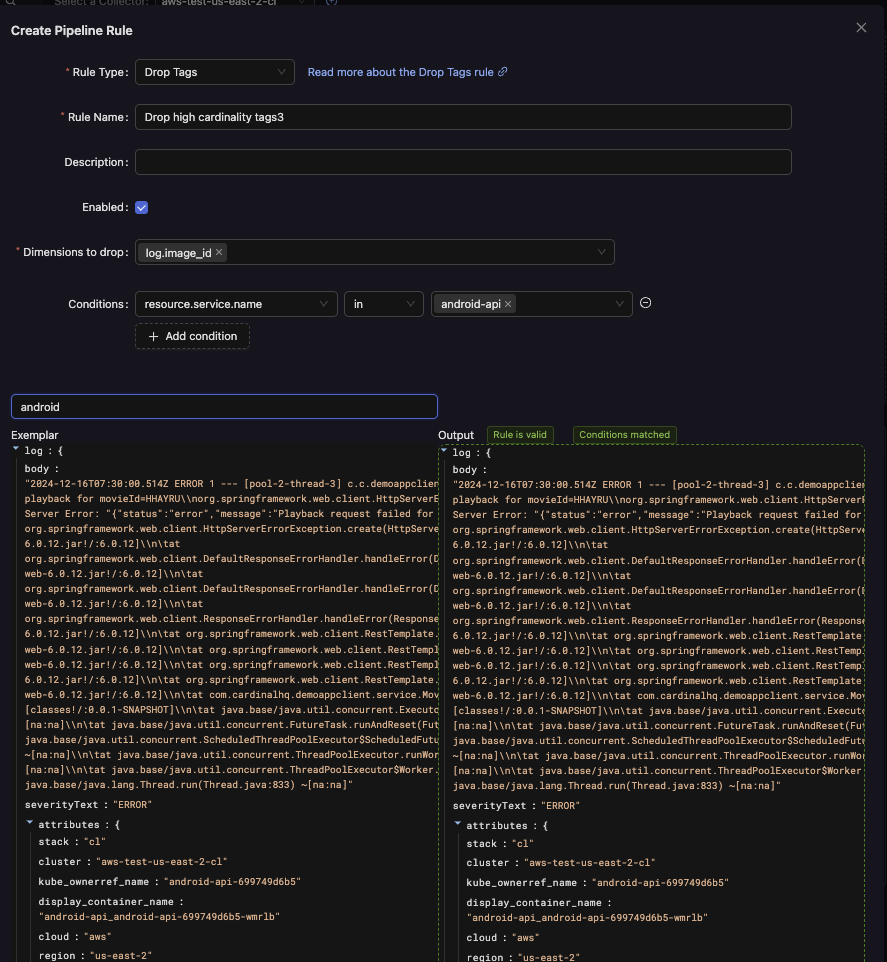

Example:

Dimensions to Drop

Select one or more dimensions to remove from your telemetry data. These can be chosen from a list of available dimensions that currently exist in your telemetry streams.

- Tags Mode: Using tags mode, you can type in dimension names directly or select from suggested options.

- Bulk Edits: Add multiple dimensions at once to quickly clean up your telemetry.

Conditions (Optional)

Apply conditions to refine when dimensions should be dropped. For instance, you may only want to remove high-cardinality dimensions for a specific service, environment, or data center. Using conditions:

- Example Condition:

  attributes["service.name"] = "edge-service"

  This ensures that the dimension removal applies only to telemetry from edge-service, leaving other services untouched.

- Multiple Conditions: Specify additional conditions to create highly targeted rules. All specified conditions must be met for the dimensions to be dropped.
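The "all conditions must be met" behavior can be pictured as a logical AND over equality checks. The sketch below assumes conditions are simple key/value equality tests on a flat attribute map. The function name matches_all and the sample attributes are hypothetical, and the product's real condition evaluation may support more than equality.

```python
def matches_all(attributes, conditions):
    """Return True only when every condition key equals its expected value."""
    return all(
        attributes.get(key) == expected for key, expected in conditions.items()
    )


# Hypothetical attribute maps for two data points.
edge = {"service.name": "edge-service", "environment": "production"}
other = {"service.name": "billing", "environment": "production"}

single = {"service.name": "edge-service"}
combined = {"service.name": "edge-service", "environment": "staging"}

print(matches_all(edge, single))    # True: the one condition holds
print(matches_all(other, single))   # False: different service
print(matches_all(edge, combined))  # False: the environment does not match
```

Note that an empty condition set matches every data point in this sketch, which mirrors conditions being optional.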

Example Configuration

Goal: Reduce metrics costs by removing the session_id dimension, an extremely high-cardinality field, but only for the front-end service in the production environment.

Configuration Steps:

- Dimensions to Drop: session_id

- Conditions:

  attributes["service.name"] = "front-end"

  attributes["environment"] = "production"

Result: All telemetry from the front-end service in the production environment will have session_id removed before being exported. This greatly reduces metric cardinality and associated costs in your monitoring platform.
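As a rough illustration of this configuration, the sketch below models the rule in memory and applies it to three sample data points. The DropRule class, its field names, and the sample attribute values are assumptions made for this example. They do not reflect the product's actual configuration schema or API.

```python
from dataclasses import dataclass, field


@dataclass
class DropRule:
    """Hypothetical in-memory model of the rule; not the product's schema."""

    dimensions: set
    conditions: dict = field(default_factory=dict)

    def apply(self, attributes):
        # All conditions must match; an empty condition set matches everything.
        if all(attributes.get(k) == v for k, v in self.conditions.items()):
            return {k: v for k, v in attributes.items() if k not in self.dimensions}
        return dict(attributes)


rule = DropRule(
    dimensions={"session_id"},
    conditions={"service.name": "front-end", "environment": "production"},
)

# Hypothetical data points: only the first one satisfies both conditions.
samples = [
    {"service.name": "front-end", "environment": "production", "session_id": "s-1"},
    {"service.name": "front-end", "environment": "staging", "session_id": "s-2"},
    {"service.name": "checkout", "environment": "production", "session_id": "s-3"},
]

results = [rule.apply(s) for s in samples]
for r in results:
    print(r)
```

Only the first data point loses session_id. The staging and checkout points pass through unchanged because each fails one of the two conditions.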

Testing and Verification

Before finalizing the rule, test it with sample data to ensure:

- Only the intended dimensions are removed.

- The telemetry still makes sense and provides valuable insights without these tags.

- Your dashboards and alerts are still functional and not negatively impacted by the change.

Adjust or refine conditions as needed to strike the right balance between cost savings and data utility.
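One way to check the first point is to compare attribute sets from before and after the rule runs. The sketch below assumes you can capture before/after attribute maps for a handful of sample data points, for example from a test export. The helper names and sample pairs are hypothetical.

```python
def unexpected_removals(before, after, intended):
    """Return attribute keys that disappeared but were not meant to be dropped."""
    removed = set(before) - set(after)
    return removed - set(intended)


def changed_values(before, after):
    """Return keys present on both sides whose values differ."""
    return {k for k in before.keys() & after.keys() if before[k] != after[k]}


# Hypothetical before/after pairs captured from a test run.
pairs = [
    (
        {"service.name": "front-end", "environment": "production", "session_id": "abc"},
        {"service.name": "front-end", "environment": "production"},
    ),
    (
        {"service.name": "checkout", "environment": "production", "session_id": "def"},
        {"service.name": "checkout", "environment": "production", "session_id": "def"},
    ),
]

problems = [
    (before, after)
    for before, after in pairs
    if unexpected_removals(before, after, intended={"session_id"})
    or changed_values(before, after)
]
print("problems found:", len(problems))
```

A check like this covers only the mechanical part of verification. Whether dashboards and alerts still behave as expected needs to be reviewed in the monitoring platform itself.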

Summary

The Dimension Drop Rule empowers you to manage and reduce high-cardinality telemetry data at the source. By selectively removing costly tags and labels, you can control expenses, simplify your datasets, and ensure that your observability strategy remains both effective and economical, especially when working with third-party vendors that charge based on data volume and cardinality.