Filter Rules

Overview

Filter rules remove telemetry data from the pipeline when it matches conditions you specify. Use them to keep unwanted data, such as noise, sensitive information, or irrelevant events, from reaching your storage or analysis systems. Because matching data is dropped before it is processed and stored, filter rules keep datasets cleaner and reduce resource usage.

What Does This Rule Do?

A filter rule continuously evaluates incoming telemetry (logs, metrics, traces, etc.) against a set of conditions. If the telemetry data meets all the specified conditions, that data is dropped entirely from the pipeline. Unlike masking or transformation rules, which alter the data, a filter rule simply prevents it from continuing through the pipeline altogether.

For example:

- Drop all logs originating from a certain host or environment to reduce noise.

- Filter out metrics related to a deprecated service.

- Prevent events containing sensitive information from being stored.
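The drop-on-match behavior described above can be sketched as a small pipeline stage. This is only an illustration, not the product's implementation: the record shape (a dict with `resource` and `attributes` keys), the `filter_stage` function, and the `host.name` field are assumptions made for the example.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical record shape: {"resource": {...}, "attributes": {...}, "body": ...}
Record = dict
Condition = Callable[[Record], bool]


def filter_stage(records: Iterable[Record], conditions: list[Condition]) -> Iterator[Record]:
    """Yield only the records that do NOT satisfy every condition."""
    for record in records:
        # Guard against an empty rule: all([]) is True and would drop everything.
        if conditions and all(cond(record) for cond in conditions):
            continue  # dropped: the record never reaches later stages
        yield record  # passed through unmodified


incoming = [
    {"resource": {"host.name": "build-01"}, "attributes": {"environment": "dev"}, "body": "cache miss"},
    {"resource": {"host.name": "web-01"}, "attributes": {"environment": "prod"}, "body": "login ok"},
]


def from_build_host(record: Record) -> bool:
    """Single condition: the record comes from the noisy build host."""
    return record["resource"].get("host.name") == "build-01"


kept = list(filter_stage(incoming, [from_build_host]))
```

Here `kept` holds only the record from `web-01`, and that record is the same object that entered the stage: nothing is masked or rewritten along the way.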

Key Features

- No Partial Modification: Unlike other rules, filter rules do not mask or transform data; they only remove it.

- Custom Conditions: Precisely define the conditions under which telemetry should be filtered out.

- Flexible Field Selection: Apply conditions to any attribute, dimension, or field available within your telemetry data.

- Improved Resource Management: By filtering data early, you save on storage and processing costs and improve overall system performance.

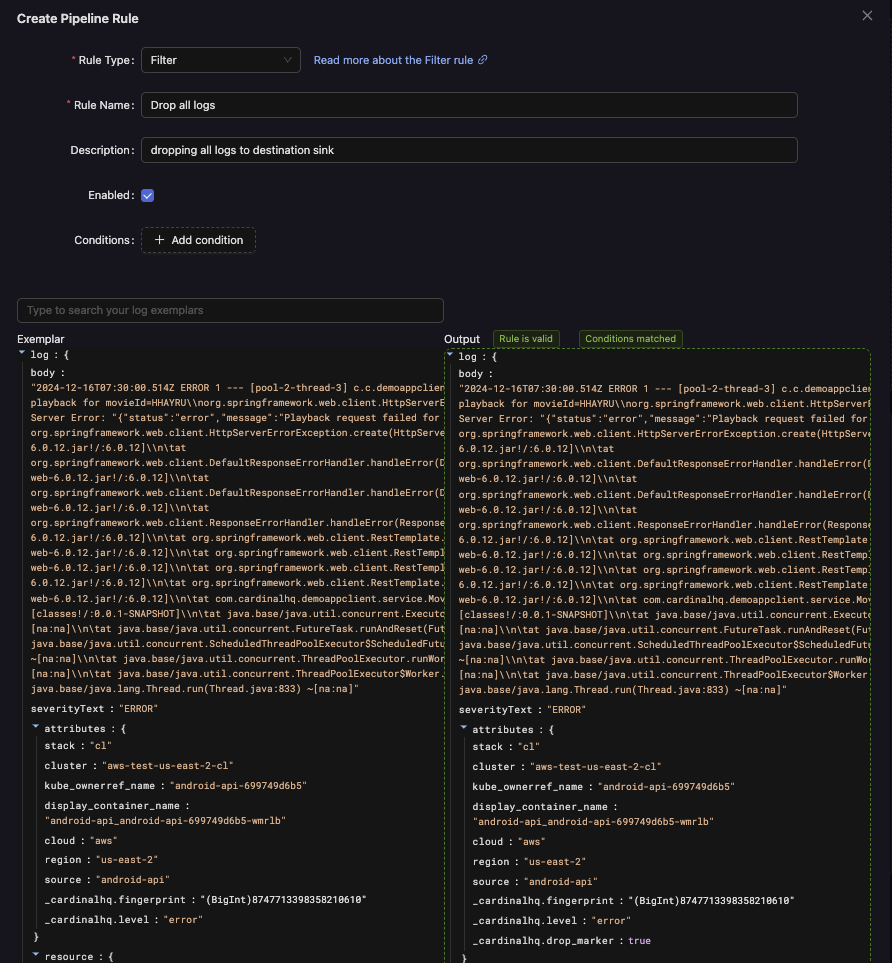

Options and Parameters

Conditions

Conditions determine when to drop data. Each condition is typically a comparison operation involving a telemetry field (e.g., attributes.environment, resource.service.name) and a target value or pattern. Examples might include:

- resource["service.name"] = "unused-service"

- attributes["http.status_code"] = "404"

- attributes["environment"] = "dev"

You can add multiple conditions. When multiple conditions are specified, all conditions must be met for the data to be filtered out.
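To make the "all conditions must be met" behavior concrete, here is a minimal sketch that parses condition strings of the shape shown in the examples above and combines them with a logical AND. The parser, the function names, and the choice to compare values as strings are assumptions for illustration; the product's actual condition syntax and matching may differ.

```python
import re

# Matches the shape used in the examples: scope["key"] = "value"
CONDITION_RE = re.compile(r'^\s*(\w+)\["([^"]+)"\]\s*=\s*"([^"]*)"\s*$')


def parse_condition(text: str) -> tuple[str, str, str]:
    """Split a condition string into (scope, key, expected value)."""
    match = CONDITION_RE.match(text)
    if match is None:
        raise ValueError(f"unsupported condition: {text!r}")
    scope, key, expected = match.groups()
    return scope, key, expected


def should_drop(record: dict, conditions: list[str]) -> bool:
    """Return True only when every condition matches (logical AND)."""
    if not conditions:
        return False  # assumption: a rule with no conditions drops nothing
    for scope, key, expected in map(parse_condition, conditions):
        actual = record.get(scope, {}).get(key)
        # Assumption: values are compared as strings, as in the quoted examples.
        if actual is None or str(actual) != expected:
            return False
    return True


rule = [
    'attributes["http.status_code"] = "404"',
    'attributes["environment"] = "dev"',
]
dev_404 = {"attributes": {"http.status_code": "404", "environment": "dev"}}
prod_404 = {"attributes": {"http.status_code": "404", "environment": "prod"}}

print(should_drop(dev_404, rule))   # True: both conditions match
print(should_drop(prod_404, rule))  # False: the environment differs
```

A record that satisfies only some of the conditions, like `prod_404`, stays in the pipeline.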

Telemetry Type Considerations

You can apply these rules to different telemetry types (e.g., logs, metrics, traces). While the mechanics are generally the same, keep in mind:

- Logs: Filter out unwanted logs based on attributes or resource fields.

- Metrics: Remove entire metric data points if they match certain conditions.

- Traces/Spans: Exclude specific spans that meet designated criteria.
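As a rough illustration of the list above, the sketch below applies one condition to log records, metric data points, and spans. The batch layout and key names (`logs`, `metric_points`, `spans`) are hypothetical; the point is only that the rule removes whole items, whatever their type.

```python
def matches(item: dict, key: str, expected: str) -> bool:
    """Check a single condition against an item's attributes."""
    return item.get("attributes", {}).get(key) == expected


# Hypothetical batch: one list per telemetry type.
batch = {
    "logs": [
        {"body": "debug ping", "attributes": {"environment": "dev"}},
        {"body": "order placed", "attributes": {"environment": "prod"}},
    ],
    "metric_points": [
        {"name": "http.requests", "value": 3, "attributes": {"environment": "dev"}},
        {"name": "http.requests", "value": 41, "attributes": {"environment": "prod"}},
    ],
    "spans": [
        {"name": "GET /health", "attributes": {"environment": "dev"}},
        {"name": "POST /orders", "attributes": {"environment": "prod"}},
    ],
}

# The same condition is evaluated per item: a log record, a data point, or a span.
filtered = {
    kind: [item for item in items if not matches(item, "environment", "dev")]
    for kind, items in batch.items()
}
```

After filtering, each list keeps only its `prod` item; the `dev` log, data point, and span are removed entirely rather than edited.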

Example Configuration

Goal: Drop all telemetry related to a deprecated service called old-service.

Steps:

- Add a condition: resource["service.name"] = "old-service"

- No other conditions are required. This rule now filters out all telemetry from old-service.

Result: All data from old-service is dropped from the pipeline, freeing resources and ensuring that irrelevant or deprecated telemetry data does not clutter your analysis.

Testing and Verification

Before finalizing your filter rule, test it against sample data to verify:

- That only the intended telemetry items are being dropped.

- That critical data is not inadvertently filtered out.

If testing reveals that too much or too little data is filtered, adjust your conditions accordingly and retest.
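One way to run such a check outside the product is a small dry run over sample records. The sketch below assumes the old-service rule from the example configuration; the `dry_run` helper, the record shape, and the idea of a "must keep" list are illustrative assumptions, not a built-in feature.

```python
def is_dropped(record: dict) -> bool:
    """Rule under test: resource["service.name"] = "old-service"."""
    return record.get("resource", {}).get("service.name") == "old-service"


def dry_run(samples: list[dict], must_keep: list[dict]) -> dict:
    """Report what the rule would drop and flag any critical record it hits."""
    dropped = [r for r in samples if is_dropped(r)]
    lost_critical = [r for r in must_keep if is_dropped(r)]
    return {
        "total": len(samples),
        "dropped": len(dropped),
        "lost_critical": lost_critical,
    }


samples = [
    {"resource": {"service.name": "old-service"}, "body": "heartbeat"},
    {"resource": {"service.name": "checkout"}, "body": "payment failed"},
    {"resource": {"service.name": "old-service"}, "body": "retrying"},
]
critical = [samples[1]]  # records that must survive the rule

report = dry_run(samples, critical)
print(report["dropped"], "of", report["total"], "dropped")  # 2 of 3 dropped
assert not report["lost_critical"], "rule would drop critical data"
```

If the dropped count is higher or lower than expected, or the final assertion fails, tighten or loosen the conditions and run the check again.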

Summary

Filter rules offer a straightforward yet powerful means to control which telemetry data passes through your pipeline. By precisely defining conditions, you can streamline data sets, focus on what matters most, and minimize the storage and processing overhead of unneeded or noisy telemetry data.